|

Hi, I'm Sayan, a Robotics Engineer with a passion for learning-based control and intelligent decision-making. I specialize in building real-world robotics systems that combine perception, motion planning, and reinforcement learning to solve complex tasks. I recently completed my M.S. in Robotics at Carnegie Mellon University's Robotics Institute, where I worked on skill-based planning and deep reinforcement learning, advised by Prof. Maxim Likhachev. Before CMU, I earned an M.S. in Mechanical Engineering at UC San Diego, where I explored mechanical intelligence and autonomy, contributing to research in the Gravish Lab and to autonomous driving work under Prof. Henrik I. Christensen at the Contextual Robotics Institute. Currently, I work as a Robotics Engineer at Dexmate, a robotics startup, where I have led the development of vision-based reinforcement learning pipelines, hybrid control systems (classical + learned), and scalable sim-to-real workflows for complex manipulation tasks using bimanual robots. I'm now looking for roles where I can bring together my expertise in robotics, deep learning, and control, whether that means developing RL policies for real-world agents, integrating perception into control loops, or scaling learning systems for manipulation and mobility. If you're working on the next generation of intelligent embodied agents, let's connect. Email / Google Scholar / LinkedIn / CV |

|

B.E. Mechanical Engg.

|

M.S. Mechanical Engg.

|

M.S. Biomedical Engg.

|

M.S. Robotics

|

Robotics Engineer

|

|

|

|

|

|

Sayan Mondal, Committee in charge: Dimitrios (Dimi) Apostolopoulos (co-advisor), Maxim Likhachev (co-advisor), Rishi Veerapaneni MSR Thesis, 2024 Developed S3D-OWNS, a hybrid planning framework that integrates a high-level geometric planner with a generalist deep reinforcement learning (DRL) locomotion policy for efficient quadruped navigation. The system enables long-horizon planning while leveraging agile, learned behaviors for real-time maneuvers like walking, jumping, and climbing. It uses terrain-aware cost predictors and a traversability-aware planner to optimize energy, time, and success rate. Demonstrated significant performance gains in cluttered 3D environments using the Unitree Go1 quadruped, showing strong generalization and efficiency across tasks and terrains. |

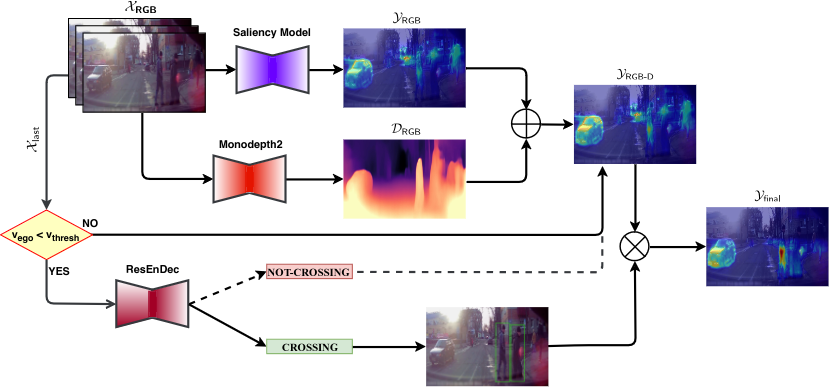

SAGE-Net framework |

Anwesan Pal, Sayan Mondal, Henrik I. Christensen IEEE / CVF Computer Vision and Pattern Recognition Conference, 2020 website In recent years, predicting a driver's focus of attention has been a very active area of research in the autonomous driving community. Unfortunately, existing state-of-the-art techniques achieve this by relying only on human gaze information, thereby ignoring scene semantics. We propose a novel Semantics Augmented GazE (SAGE) detection approach that captures driving-specific contextual information in addition to the raw gaze. Such a combined attention mechanism serves as a powerful tool to focus on the relevant regions in an image frame in order to make driving both safe and efficient. Using this, we design a complete saliency prediction framework, SAGE-Net, which modifies the initial prediction from SAGE by taking into account vital aspects such as distance to objects (depth), ego vehicle speed, and pedestrian crossing intent. Exhaustive experiments conducted with four popular saliency algorithms show that in 49/56 (87.5%) cases, considering both the overall dataset and crucial driving scenarios, SAGE outperforms existing techniques without any additional computational overhead during the training process. The augmented dataset along with the relevant code are available as part of the supplementary material. |

|

Sayan Mondal, Advisor: Nicholas G. Gravish Committee in charge: Nicholas G. Gravish (chair), Tania Morimoto, Michael T. Tolley MS Thesis, 2020 This master's thesis describes a novel underactuated robotic microgripper with two fingers. The design specifications, a thorough kinematic description of the gripper, and its static analysis are presented. The novelty of this gripper lies in the simplicity of its mechanism for picking up target objects. What makes it unique is its ability to grasp objects that are either in the same plane as the gripper or at a lower level. The gripper is designed to be driven by a single actuator. For a preliminary evaluation of the gripper's object manipulation capabilities, standard hexagonal nuts of varying weights and sizes were selected. The success of the gripper in grasping the nuts at two different orientations was observed and studied. Only one of the test cases is shown in detail. In addition, a kirigami spring has been incorporated into a modified design of the gripper to enhance its grasping capabilities. |

|

|

|

Sayan Mondal Personal Project I built a physics-realistic peg-in-hole manipulation system in MuJoCo using a Robotiq 2F-85 gripper from the mujoco_menagerie and two meshes (peg and cuboid with hole). To keep control explicit and reproducible—no mocap—the gripper mounts on a custom 6-DoF base (three prismatic slides + one ball joint) with a tendon-actuated jaw. A compact Cartesian controller tracks position on the slides and orientation via body-fixed angular-velocity control with torque limits. Contact modeling is tuned per geometry (friction and solref/solimp), and the non-convex hole block is represented by convex parts for robust collision. The system supports both intuitive teleoperation and a scripted finite-state agent (approach → grasp → lift → rotate ~95° → align → release). Randomized placement, runtime speed control, and structured logging (poses, jaw separation, filtered contact forces) make experiments repeatable and debuggable. The result is a stable, re-implementable baseline that reliably handles tight-tolerance alignment and insertion while exposing a simple API: 6-D pose + one jaw command. Code is not publicly hosted—contact me for access. |
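The scripted agent's stage sequence (approach → grasp → lift → rotate → align → release) can be pictured as a small finite-state machine. The sketch below is only an illustration of that idea under stated assumptions: the `Stage` enum, `next_stage`, `run`, and the boolean completion flag are hypothetical names, and the real project's transition conditions (pose tolerances, contact checks) are not described here.

```python
from enum import Enum, auto


class Stage(Enum):
    APPROACH = auto()
    GRASP = auto()
    LIFT = auto()
    ROTATE = auto()
    ALIGN = auto()
    RELEASE = auto()
    DONE = auto()


# Fixed stage order taken from the project description; DONE is an added terminal state.
ORDER = [
    Stage.APPROACH, Stage.GRASP, Stage.LIFT,
    Stage.ROTATE, Stage.ALIGN, Stage.RELEASE, Stage.DONE,
]


def next_stage(stage: Stage, stage_done: bool) -> Stage:
    """Advance to the following stage once the current one reports completion."""
    if stage is Stage.DONE or not stage_done:
        return stage
    return ORDER[ORDER.index(stage) + 1]


def run(completion_signals):
    """Step the machine over per-tick completion flags and return the stages visited."""
    stage = Stage.APPROACH
    visited = [stage]
    for done in completion_signals:
        new_stage = next_stage(stage, done)
        if new_stage is not stage:
            visited.append(new_stage)
        stage = new_stage
    return visited
```

In a real controller the completion flag would presumably come from checks on the logged quantities (pose error, jaw separation, filtered contact force) rather than a precomputed list.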

|

Sayan Mondal, Praveen Venkatesh, Siddharth Saha, Khai Nguyen 16-831: Introduction to Robot Learning In deep reinforcement learning, achieving generalization over unforeseen variations in the environment often necessitates extensive policy learning across a diverse array of training scenarios. Empirical findings reveal a notable trend: an agent trained on a multitude of variations (termed a generalist) exhibits accelerated early-stage learning, but its performance tends to plateau at a suboptimal level for an extended period. In contrast, an agent trained exclusively on a select few variations (referred to as a specialist) frequently attains high returns within a constrained computational budget. To reconcile these contrasting advantages, we experiment with various combinations of specialists and generalists in the quadrupedal locomotion setting. Our investigation examines the impact of each skill when trained as a specialist and the impact of combining the skills to create a more generalist agent. |

|

Ballbot with 7-DOF arms navigating and pushing against a wall |

Juan Alvarez-Padilla, Christian Berger, Sayan Mondal, Haoru Xue, Zhikai Zhang 16-745: Optimal Control and Reinforcement Learning slides Several ballbots have been developed, yet only a handful have been equipped with arms to enhance their maneuverability and manipulability. The incorporation of 7-DOF arms into the CMU ballbot has presented challenges in balancing and navigation due to the constantly changing center of mass. This project proposes a control strategy that incorporates the arm dynamics. Our approach is to use a simplified whole-body dynamics model, with only the shoulder and elbow joints moving for each arm. This reduces the number of states and accelerates convergence. We focused on two specific tasks: navigation (straight and curved paths) and pushing against a wall. Trajectories were generated using direct collocation for the navigation task and hybrid contact trajectory optimization for pushing the wall. A time-varying linear quadratic regulator (TVLQR) was designed to track the trajectories. The resulting trajectories were tracked with a mean-average error of less than 4 cm, even for the more complex path. These experiments represent an initial step towards unlocking the full potential of ballbots in dynamic and interactive environments. |
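For readers unfamiliar with TVLQR, the tracking gains are typically obtained from a backward Riccati recursion along the linearized reference trajectory. The following is a minimal discrete-time sketch, not the project's implementation: the function name `tvlqr_gains`, the choice of a discrete-time formulation, and constant cost matrices `Q`, `R`, `Qf` are all assumptions made for illustration.

```python
import numpy as np


def tvlqr_gains(A_list, B_list, Q, R, Qf):
    """Backward Riccati recursion for x_{k+1} = A_k x_k + B_k u_k.

    A_list and B_list hold the dynamics linearized about the reference
    trajectory at each knot point. The returned gains K_k are meant to be
    used as u_k = u_ref_k - K_k @ (x_k - x_ref_k).
    """
    P = Qf  # cost-to-go at the final knot point
    gains = []
    for A, B in zip(reversed(A_list), reversed(B_list)):
        # K_k = (R + B^T P B)^{-1} B^T P A
        K = np.linalg.solve(R + B.T @ P @ B, B.T @ P @ A)
        # P_k = Q + A^T P (A - B K_k)
        P = Q + A.T @ P @ (A - B @ K)
        gains.append(K)
    return gains[::-1]  # reorder so gains[k] corresponds to time step k
```

As a quick check, passing a constant double-integrator model (`A = [[1, dt], [0, 1]]`, `B = [[dt**2 / 2], [dt]]`) for every step yields gains that drive an initial offset toward zero over the horizon.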

|

Logic Geometric Programming to solve relatively long-horizon TAMP problems |

Shivam Vats, Sayan Mondal 16-748: Underactuated Robotics Sequential manipulation tasks are notoriously hard to plan for because they involve a combination of continuous and discrete decision variables. In this project we study and experimentally analyze Logic Geometric Programming (LGP), a trajectory-optimization-based approach to solving such problems. |

|

Multi-object tracking using Deep-SORT for waste-tracking |

Sayan Mondal, Howie Choset, Matthew J. Travers, Graduate Research Assistant at Biorobotics Lab This work presents a comprehensive approach to waste classification on a moving conveyor system, with a particular emphasis on leveraging sequential models to capture the spatial and temporal components inherent in the sequence of images. The use of sequential models contributes to enhanced stability and reliability in the classification results. Additionally, a robust multi-object tracking system has been developed to monitor the trajectory of waste materials across diverse conveyors, leveraging data from multiple cameras. The study's key collaboration was with the Gateway Recycling facility, which specializes in paper and plastic waste commodities. The project's success has paved the way for a significant collaboration with the recycling facility, contributing valuable insights and advancements in waste management practices. Furthermore, I led the research team in an initiative for recycling facility automation. A team of five graduate students was assembled to automate the data-collection pipeline. This involved the development of an efficient system capable of identifying various grades of paper and plastic. Notably, the system addressed the challenge of differentiating between grades that even facility workers found visually challenging. The outcomes of this work showcase the potential of advanced technologies in waste management and underscore the importance of automation in streamlining recycling processes. The success of the project holds promise for improving the efficiency and accuracy of waste classification systems in recycling facilities, contributing to sustainable waste management practices. |

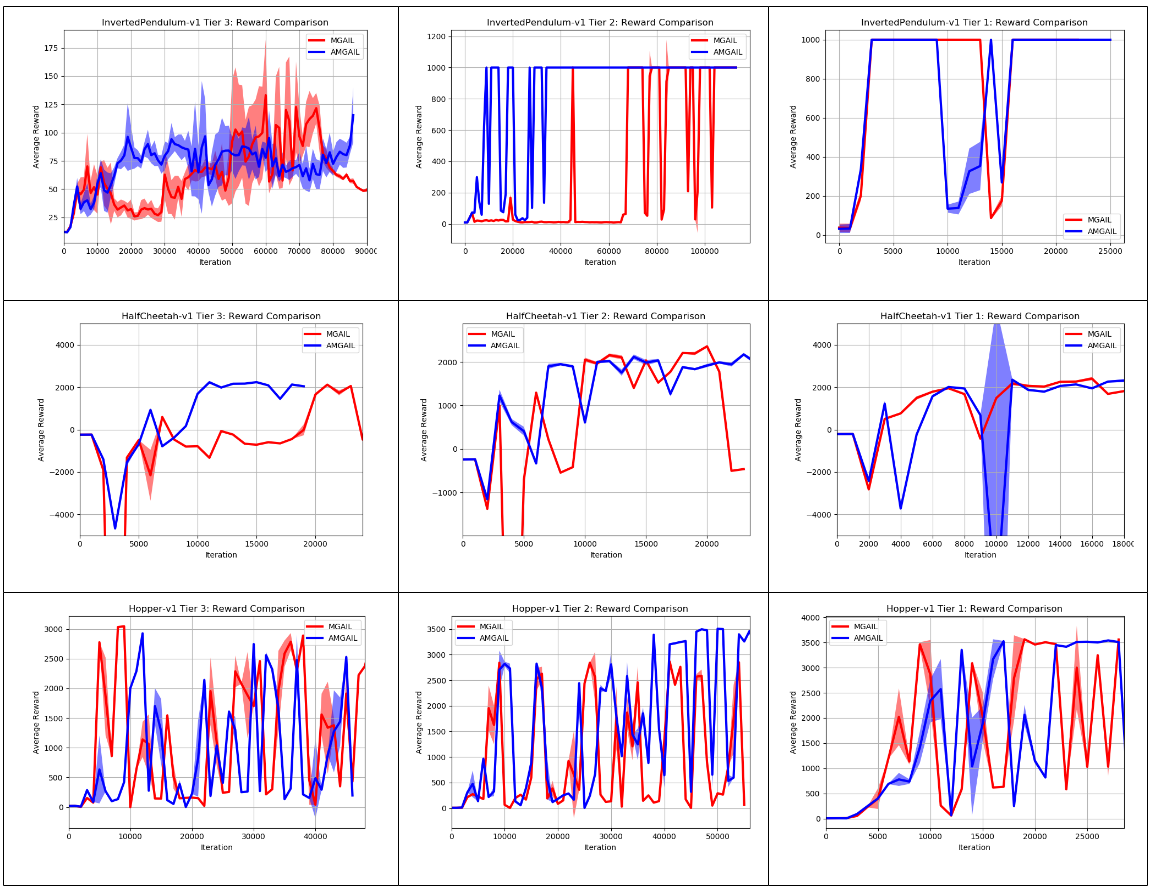

MGAIL vs AMGAIL |

Sayan Mondal, David Orozco, Nicholas Ha ECE 276C: Robot Reinforcement Learning In this paper we introduce a new concept named AMGAIL to solve the imitation learning problem in environments where rewards are available. AMGAIL is based on MGAIL, but it replaces bad expert trajectories with good ones that we generate. We use the total reward of each trajectory to judge how good or bad it is. We tested on three MuJoCo environments: Hopper-v1, HalfCheetah-v1, and InvertedPendulum-v1. We expect AMGAIL to perform better than vanilla MGAIL when the expert trajectories come from a mix of experts of varying levels, because the algorithm is able to replace the weaker experts and in turn lower the variance. Our results generally confirm this. |
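One way to picture the trajectory replacement step is the sketch below. It is a guess at the general shape of the idea rather than the project's code: the fixed `min_return` threshold, the `(state, action, reward)` tuple format, and the function name `replace_weak_trajectories` are all assumptions, since the write-up does not say how the cutoff is chosen or how trajectories are stored.

```python
def total_reward(trajectory):
    """Sum the rewards of a trajectory given as (state, action, reward) tuples."""
    return sum(reward for _, _, reward in trajectory)


def replace_weak_trajectories(expert_trajs, generated_trajs, min_return):
    """Swap low-return expert trajectories for high-return generated ones.

    An expert trajectory is replaced only if its total reward is below
    min_return and a generated trajectory at or above min_return is still
    available; the best generated trajectories are used first.
    """
    candidates = sorted(
        (t for t in generated_trajs if total_reward(t) >= min_return),
        key=total_reward,
        reverse=True,
    )
    result = []
    for traj in expert_trajs:
        if total_reward(traj) < min_return and candidates:
            result.append(candidates.pop(0))
        else:
            result.append(traj)
    return result
```

The cleaned demonstration set would then be passed to the imitation learner in place of the original mixed-quality set.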

|

|

|

Sampling-based planners for a 5-DOF arm moving from its start joint angles to its goal joint angles. |

Point robot catching a moving target in 2D grid worlds (A* search algorithm). |

|

Single-view image to 3D reconstruction |

Neural Radiance Field and Neural Surfaces |